Ellen Pearlman is a New York-based media artist, curator, writer, critic, and educator. She holds a PhD in Digital Media from the School of Creative Media at Hong Kong City University and was a Fulbright World Learning Specialist in Art, New Media and Technology, and Faculty at Parsons/New School University. Her PhD project, “Is There A Place In Human Consciousness Where Surveillance Cannot Go?” was awarded the highest global ranking from Leonardo LABS abstracts in 2018. She is also the Director and Curator of the ThoughtWorks Arts Residency, President of Art-A-Hack™, and Director of the Volumetric Society of New York.

Ellen Pearlman’s Brain Opera Noor is a unique example of multidisciplinary and intercultural STEAM efforts. It also explores and challenges the assumptions of private and safe spaces humans of the twenty-first century may be taking for granted.

Irina Yakubovskaya (IY): What sparked your interest in the interdisciplinary work that trespasses the boundaries of genres, styles, mediums, and bridges sciences, humanities and performing arts?

Ellen Pearlman (EP): I could not find any one medium that was capable of expressing what I wanted to say, in the way I wanted to say it. It was a question of identity and voice. An artist’s identity is composed of multiple elements. It changes all the time. What you focus on when you are young changes as you mature. I have written an essay about identity where I explore how my identity changes in different circumstances. With 10 dollars I can be poor in one country and rich in another, and I can experience all of it in the same week just by taking an airplane to another country. Once I understood that when I was young, I understood that how other people perceived me was also incredibly fluid depending on the context. I think most young people have no idea about how fluid their life can be when they change their context. Many Americans very clearly don’t.

IY: Your international career is truly remarkable. Having worked, performed, and curated art projects across the globe, did you at any point discover a unifying theme in the various reactions and responses your work was receiving?

EP: I’ve been to over 45 countries, and what I found universal is–artists are the same all over the world.

IY: How do you mean?

EP: It’s the same type of people. Understanding that, it’s very easy for me to go to countries I’d never been to, and immediately find a community of artists. When you have something that is powerful thematically and touches universal themes, I think people around the world recognize it.

IY: Your last project, Noor: A Brain Opera, combines different genres, from interactive theatre to high-tech telematic performance channeled through the actual brain of the performer. How did your artistic path lead you to such a unique creative endeavor? In the official description of the project, you say that “Noor is a metaphor for issues of surveillance, privacy, and consciousness.” What is the importance of Noor as a metaphor for the undecipherable human mind? Why did you center the story around this particular historical figure?

EP: Her [protagonist’s] code name was Nora. It is a fascinating story. When I was developing an idea for a Brain Opera, I realized very clearly that story is everything. Otherwise, you have these odd performances no one can understand. I understood very clearly that the story would come to me. When I found out about Noor Inayat-Khan, whose father brought Sufism to the West, what shocked me most was that she was a Muslim woman who died at the hands of the Gestapo for being a secret agent for the Allies. I had no idea that she even existed. Considering all the conflicts in the world, I thought it was very important to highlight the fact that a Muslim woman had done this during WWII to help stop the rise of the Nazis. I thought that was an important story to be told. Also, the Gestapo could not break her when they captured her because of the issues of her faith as a Sufi. Surveillance could not go somewhere because she had such faith–she could not be broken and made to give up her fellow underground cohorts. I found that to be an unspoken rebuttal or answer to “is there a place in human consciousness where surveillance cannot go.” It wasn’t didactic, but subtle.

IY: So, is there a place in human consciousness that is unattainable?

EP: Ultimately, it’s the question of perception, and how trained someone is in the nature of their own mind. I think people who believe in their projections are more susceptible than people who have space between their projections and themselves.

IY: Are parts of the brain hackable?

EP: There is technology [for it] already. Openwater is the company that works on creating an equivalent of fMRI, but the size of a head. They work with very specific kinds of optics. Once that happens, it will be very easy to decode imagery inside the brain using the mapping of the brain called the Semantic Brain (which is from Jack Gallant’s lab at the University of California, Berkeley). Overall, it’s a question of available technologies, who controls them, and how they are commercialized.

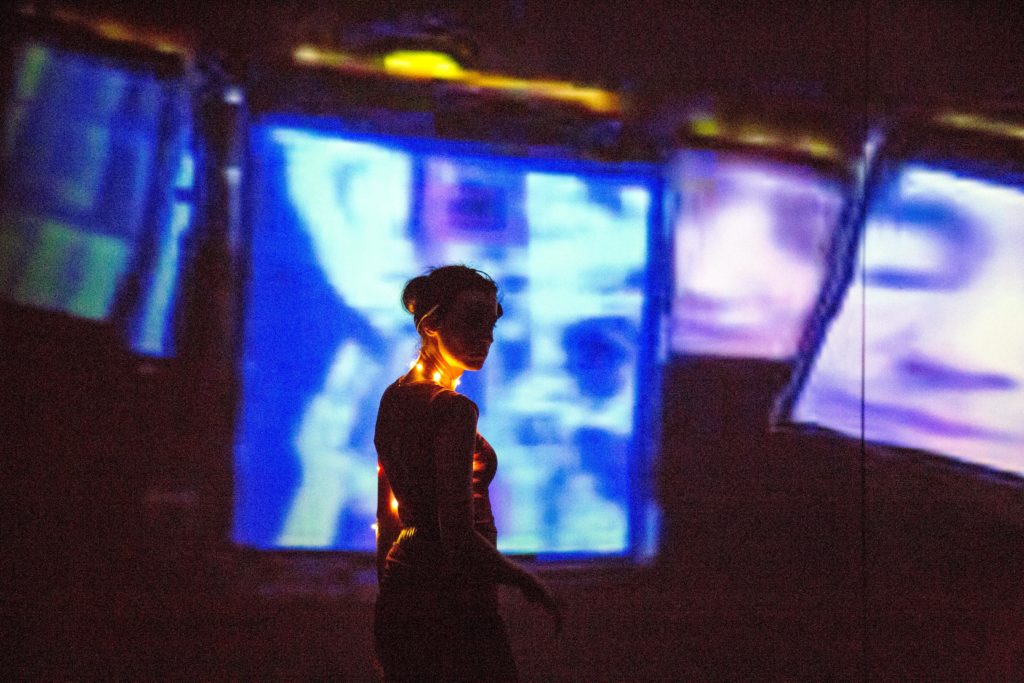

When I made the Brain Opera, I used the four emotions that could be measured by the Emotiv brainwave headset: interest, excitement, frustration, and meditation. Those are four that are measured with about 84% accuracy, which for an art performance is just fine, but for medical research is questionable. As the performer was interacting with the audience through gaze, touch, and movement in live time, on one of the five screens in the 360-degree theater the audience could watch the performer’s brain waves, live. This allowed the audience, as well as the performer herself, to monitor her cognitive processes. Because it was a 360-degree theatre, she could see which of the brainwaves were active in her own mind. It created a live time feedback loop between the audience and the performer without any pedantic interventions of forcing the audience to interact. It happened very naturally. As the audience watched the performer’s brain waves, the performer watched the audience and interacted with them, which changed the brain waves as the story about Noor was being narrated, which changed the visuals, which also changed the sounds. It was a living organism, or a live time biometric feedback loop.

In the new Brain Opera I am working on, I have already prototyped a body of light that is hooked up to brain waves. I just need to find someone to build and fund it. In the new Brain Opera, which also uses emotional intelligence and artificial intelligence analysis, the brain waves, the body of the performer, and the body of the emotionally intelligent entity are all going to be in a live time feedback loop that everyone can watch.

IY: I feel like the public would not like the idea of sending and interpreting the data directly from the brain.

EP: This is biometric monitoring. With Fitbit, for example, you’re sending your biometrics to a computer cloud, and you can show it to other people. No one thinks twice about it. I am also the director of ThoughtWorks Arts Residency. We work with artists in tech, we work with cyborgs, biometrics, and VR, we’ve worked with illegal genetic data harvesting, we’ve worked with facial recognition and AI, and robotics. In terms of facial recognition, the eye gaze and artificial intelligence, that’s another biometric thing in addition to brain waves. Both facial recognition and gaze tracking are already happening. Is that the brain? Well, yes, ultimately. The input is the brain, but the output is the face or the eye/iris. It is already here. It is just a matter of degree, and control, and what it’s being used for.

IY: What is the title of the new Brain Opera?

EP: It is called AIBO–Artificial Intelligence Brain Opera. It is going to have two characters instead of one. Semantic analysis (analysis of the emotional state of someone’s speech) is in a very baby state of development. I’ve been developing characters for both the emotional intelligence entity and the human entity because they will interact. Specifics about how I do this will emerge depending on other resources, like funding. I already have a prototype of a database for the emotional entity, and I’ve already prototyped linking brain waves to semantic analysis live time in a performer, which shows up as a body of light on the performer’s body. It’s worked, I’ve done it, and now I just need to bring it to the next level. Our speech is going to be analyzed for emotional content, and it’s going to be tied to our biometrics. This is already happening in certain virtual medical analytic tools. In other words, the medicine of the future is going to be between a doctor and a patient online, and the camera will be monitoring the patient, and the doctor will see facial and iris analysis and blood flow, live time. This is already in prototypes. What does that mean when it’s not used for medicine? Just like Facebook, it is great or terrible, depending. I’ve been talking to non-profit organizations very seriously about legislation and ethics to deal with this new type of monitoring. Climate change is very important and critical right now, but so is this. People are more active about climate change than they are about biometric monitoring, and brain and cognition monitoring, so it’s less well understood. It is not just brain, it’s eye gaze, blood flow, breath; all of it is up for grabs.

Photo and copyright Ellen Pearlman

IY: Telematic performance has been around for decades. In the 21st century, it is truly in sync with the current trends in technology; however, it is yet to enter the mainstream of the theatre world. What is the reason for this, in your opinion, and do you expect the telematic mode/style to enter a mainstream world of performing arts anytime soon?

EP: I have a very clear explanation of why it’s used or not used. First of all, the internet as we know it, like what we’re talking on right now, is on what’s called protocol IPv4. That’s the standard protocol that is used worldwide. And it’s slow. Telematics actually is set up for IPv6, which is owned by military and research institutions. It is private, it’s a bigger wire, and there are very few technologies that can compress for IPv6. Unfortunately, there is no video compression algorithm for IPv6; it uses the standard video compression that everyone uses. Therefore, you have an enormous tunnel with only a piece of thread for video. There is not enough software written for it, and there is absolutely no way at this point to compress video to take advantage of the enormous bandwidth. There are realistic, pragmatic and technical reasons: who owns it (research networks, for the most part), who writes software for it, and whether it could be commercialized. It can’t [be commercialized], because it’s a research network.

IY: Do you expect it to change in the future?

EP: Not unless governments of the world are willing to invest billions of dollars in IPv6, which doesn’t seem to be happening. When I worked in Canada and Hong Kong, and then came back to NYC and tried doing telematic performance, I ran into problems with Time Warner Cable. They had access to the high-speed bandwidth. In America, I ran into capitalism. It depends on who the governing bodies of the telematic networks are, and each one is controlled by organizations who allocate or don’t allocate. I hit a wall in New York–nobody really cared about working in telematics. Generally, it’s university-based, and for creative practice, it’s presented as a linked live time music concert. It’s not being used to its full potential in the least. They don’t use the interactivity that I would like to develop. I’m not pessimistic, I just think it’s not going to be pushed in any way by any programs anytime soon. When I was in Canada, they created a Canada Research Chair position for it, and invested 2 million dollars in a Telematics lab. Unfortunately, due to university politics, that lab fell apart.

IY: Do you think other technology could function similarly to telematics?

EP: Yes: VR, AR, and volumetric filmmaking. Volumetric filmmaking is depth filmmaking; it uses a Kinect sensor. There are X, Y, and Z coordinates: X is width, Y is height, and Z is depth. There’s a lot of work being done now in this area. I’ve been working with volumetric film since 2013, and it has jumped in its technical abilities. Very soon, within the next six months, live time volumetric streaming will be capable of going into VR and, very soon, AR, and that will change things. I am actively working with people who are developing it right now.

IY: There will always be some form of pushback from those who are more traditional about how art should be performed and recorded.

EP: There is Virtual Reality, Augmented Reality, and Mixed Reality. I personally work in Mixed Reality. Volumetric performance will allow live performances to be live-streamed inside VR. I saw Hamlet at the Tribeca Film Festival in 2017. In it, four people at a time would put on VR headsets in Manhattan, and actors in a motion capture studio in Brooklyn would play Hamlet. Their movement would be displayed as characters talking [and acting] in VR, and you, the audience member, could walk around with the characters. The live experience of technology is here already, and there’s absolutely no way of stopping it. There can be as much pushback as people want; it’s not going to stop it. There was no way of stopping railroads, or the industrialization of an agrarian society, and you can’t stop this technological wave. It’s impossible.

IY: Could these technologies ultimately provide a platform for a shared global empathy?

EP: This is what I call a Facebook question. I think these technologies will highlight and augment what already exists.

IY: Do you think technology breaks human connections?

EP: No. I think it widens the connection, but on the other hand what concerns me is the addiction aspect of it. There is an issue in expansion and addiction.

IY: Do you address it in your work?

EP: No. I think my work is much more concerned with what’s coming instead of what is.

I am much more interested in what I see will happen after I’m dead than in what I’m looking at at the moment. I think that’s where my work shifts perspectives. For the Brain Opera, my interest in surveillance of consciousness and dream states is based on very substantial readings on my part, a thousand plus journal papers on neuroscience. The work I’m doing now is on Emotional Intelligence and Artificial Intelligence. The piece I’m working on now asks very simple questions: can an AI be fascist, and can AI have epigenetic memory, which means inherited trauma.

IY: This blows my mind.

EP: It blew my mind also when I found out what kind of research is being done in the sciences.

IY: Could you give a glimpse?

EP: Sure. In 2012 the Obama administration started the Obama Brain Initiative, which gave hundreds of millions of dollars over 10 years to three agencies: 25% of the money went to the NIH (health), 25% to the NSF (science), and 50% of the money went to DARPA (the defense department). DARPA has a sub-agency called IARPA, which is made up of former CIA and other agencies that have morphed into a new agency. The major reason they morphed is brain research. The EU has a brain research initiative; Australia, Israel, Japan, Canada, and China all have one. Facebook poached some of the best labs in neuroscience and got those researchers into their secret building, Facebook Building 8. They’ve also hired former directors of DARPA. Elon Musk is trying to do the same. He also hired a former DARPA director. Facebook built SARA initiatives, which are special research agreements with 17 research universities, mostly around issues of brain science. I’ve been following up on that on my Tumblr blog. Very soon the images you see in your dreams will be decoded. It is already being done, and I’ve blogged extensively about it, with links. After doing all that research I realized that being able to see what you see in your brain or your dreams is actually going to become, very soon, not only a reality but a common occurrence. Understanding that, I realized that as a visual artist there was no way I could handle this subject: it was operatic in proportions. Therefore, I worked with a team of seven in three countries (based in New York, Hong Kong, and St. Petersburg, Russia) to put together a Brain Opera using the brainwave technology, because I saw it coming.

Photo: Vincent Mak. © Ellen Pearlman

Ellen Pearlman has published extensively on the past, present, and future of brain research, technology, history, arts, and mixed media.

The following articles by Pearlman provide a detailed analysis of various topics that are addressed in the interview above:

Pearlman, Ellen. “I, Cyborg.” PAJ: A Journal of Performance and Art 37, no. 2 (2015): 84-90.

Pearlman, Ellen. “Brain Opera: Exploring Surveillance in 360-degree Immersive Theatre.” PAJ: A Journal of Performance and Art 39, no. 2 (2017): 79-85.

Pearlman, Ellen. “The Brain as Site-Specific Surveillant Performative Space.” International Journal of Performance Arts and Digital Media 11, no. 2 (2015): 219-234. DOI: 10.1080/14794713.2015.1084810

The best way to contact Ellen Pearlman regarding research inquiries is through the Noor Facebook page.

This post was written by the author in their personal capacity. The opinions expressed in this article are the author’s own and do not reflect the view of The Theatre Times, their staff or collaborators.

This post was written by Irina Yakubovskaya.
