Conducted 2/17/20 by Allison Berkoy, Assistant Professor City Tech, CUNY New York. This interview has been edited and condensed.

Allison Berkoy: Ellen Pearlman, we’re here to talk about your newest work AIBO, which you describe as an “emotionally intelligent” Artificially Intelligent Brain Opera (you’ll need to unpack that for us in a moment…). And it premiered in February 2020.

Ellen Pearlman: Right, on February 21st, 2020, at the Estonian Academy of Music in its brand new black box theater in Tallinn, Estonia. It was funded by a European Union Vertigo STARTS Laureate grant.

The opera is based on the love story between Eva Braun and Adolf Hitler and chronicles their 14-year relationship from Eva’s point of view. The spoken word libretto on Eva’s side is fixed, as it was taken from her life story “The Lost Life of Eva Braun” by Angela Lambert. The answers from AIBO vary each time Eva speaks, and are processed live-time in the Google Cloud using a new machine-learning model called GPT-2.

The AI character was built using 47 copyright-free texts. The texts ranged from books and movie scripts that started at the end of the 19th century and ended around 1940, which would be the historical time of the life of the AI character. They included books and texts such as Frankenstein, Dracula, Dr. Jekyll and Mr. Hyde, Venus In Furs, Havelock Ellis’s books on sexual perversion, books on eugenics, books on male castration and many others. I used very strange texts to build the AI character and it was seeded by an understanding of the range of human perversion, mostly male human sexual perversion. Oh, Thus Spake Zarathustra by Nietzsche, you know, these kinds of texts.

AB: Set the stage for us…. I am entering the space as a member of the audience, and I have no idea what I am about to get into. What do I see?

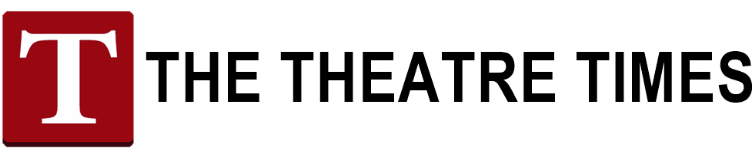

EP: You enter a black box theater with no seats. There are four hanging screens overhead. You see people milling around, and you see a long table in the middle with myself and Hans Gunter Lock, my Technical Director, behind a bunch of computers. People are just milling around, there’s nowhere to sit down except on the floor. Next to me is the woman who plays the character Eva [Sniedze Strauta]. She is wearing a bodysuit of light, which is a smart textile costume that is connected wirelessly to a brain-computer interface she is wearing on her head.

When the audience is assembled, I begin speaking. I explain to the audience that I am putting a brainwave headset on Sniedze. If they’re very curious, they come over and look from behind so they can see the computer I’m working on. When I put the brainwave headset on Sniedze the audience is watching. I explain to them that they may be touched, and they may be looked at by Sniedze during the performance. This is explained to them very clearly because that’s part of the process I have, to bring the audience in and have their participation and tacit permission.

I tell them that they’re going to see a performance between Eva and a Google Cloud-based AI called AIBO (Artificial Intelligent Brainwave Opera). As Eva speaks to AIBO her words will appear on a screen. At the same time, they will hear AIBO’s response live-time from the Google Cloud and its words will also appear on the same screen. Simultaneously, Eva’s brainwaves launch videos, which play on the four screens overhead depending on her emotional state, along with audio triggered by her emotions. Her emotions also display on her body as different colors. So, you are seeing and hearing Eva’s emotions very vividly.

Eva (Sniedze Strauta) in bodysuit of light displaying frustration (red), excitement (yellow) and interest (violet) as AIBO returns a frustrated emotion (red glow along the wall). PC: Taaven Jansen.

AB: And that’s the bodysuit of light?

EP: Part of it, yes. Her bodysuit lights up with different colors depending on what she’s feeling. There’s red for frustration, green for meditation, purple for interest, and yellow for excitement. That is all this particular model of brainwave headset, an Emotiv EPOC+, is able to measure.

I explain that AIBO will answer Eva’s statements. Those statements are processed in the Google Cloud and analyzed for sentiment, or emotion. Those emotions will be positive, negative, or neutral. There are also lights along the wall, and they light up depending on AIBO’s own emotional response to Eva. Positive is green, negative is red, and neutral is yellow.

AIBO tries to become human, and in trying to become human AIBO grabs Eva’s last displayed emotional memory (the video of it) to imitate her and her human feelings. So, let’s say Eva was frustrated, and her brainwaves launched a frustrated image and a frustrated sound. AIBO will grab the last “frustrated” video that Eva displayed on the screen and will try to reconstruct it. This is because AIBO wants to be human and have emotions. But AIBO will fail at reconstructing Eva’s emotions because AIBO is an AI and does not know how to be human.

AB: Can you explain further what you mean by “trying to reconstruct it”?

EP: Currently AIs are just at the beginning of trying to emulate human emotion, and analyzing emotion from speech is the simplest way to do it, because it is easy to tag sentence structure. The tools are called natural language processing (NLP). They perform sentiment analysis, which returns a magnitude and a score. Magnitude means how strong or forceful a statement is. So, if I say, “I like you very much,” “very” makes it a strong statement. If I just say, “I like you”, it’s not strong. “Like” is also analyzed, as it is positive. So, you get a numeric reading back of a positive statement that is very strong, meaning it has a positive score and a high magnitude. Or I could say, “I hate you” or “I hate you so much”, and you would get “negative” or “negative very strong”. And that’s it, that’s all text-based emotional analysis can do right now. Oh, and it can also give a neutral reading.
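To make the score and magnitude readings concrete, here is a minimal Python sketch. It is not the opera's code. It assumes a score between -1.0 and 1.0 and a non-negative magnitude, which is how sentiment services of this kind commonly report results. The names `Sentiment`, `classify`, and `wall_color` and both cutoff values are hypothetical. The only detail taken from the interview is the color mapping: green for positive, red for negative, yellow for neutral.

```python
from dataclasses import dataclass

# Assumed cutoffs for illustration; the interview does not state
# the values used in the performance.
NEUTRAL_BAND = 0.25      # scores within +/- this band count as neutral
STRONG_MAGNITUDE = 1.0   # magnitude at or above this counts as "very strong"

# Wall-light colors as described in the interview.
WALL_COLORS = {"positive": "green", "negative": "red", "neutral": "yellow"}


@dataclass(frozen=True)
class Sentiment:
    score: float      # assumed range: -1.0 (negative) to 1.0 (positive)
    magnitude: float  # assumed range: 0.0 and up; larger means more forceful


def classify(sentiment: Sentiment) -> tuple[str, bool]:
    """Return (label, is_strong) for one sentiment reading."""
    if sentiment.score > NEUTRAL_BAND:
        label = "positive"
    elif sentiment.score < -NEUTRAL_BAND:
        label = "negative"
    else:
        label = "neutral"
    # A neutral reading is never reported as "strong" in this sketch.
    is_strong = label != "neutral" and sentiment.magnitude >= STRONG_MAGNITUDE
    return label, is_strong


def wall_color(sentiment: Sentiment) -> str:
    """Pick the wall-light color for a sentiment reading."""
    label, _ = classify(sentiment)
    return WALL_COLORS[label]
```

With these assumed cutoffs, a reading such as `Sentiment(score=-0.9, magnitude=2.0)` would be labeled negative and strong, and the wall lights would glow red.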

AIBO wants to be human and tries to make an image or duplicate of Eva’s last emotional image. That image is processed through Max/MSP, a real-time audiovisual software, and comes out glitchy. I made it glitchy on purpose because AIBO cannot make an emotion. It can try, but it’s like a kid on training wheels. When you take off the training wheels, the kid falls off the bike. It can’t do it.

AB: And this was not your first brain opera. Can you talk about the gravitation towards opera as a form, and the decision for this one to bring in an AI character?

EP: I have no training in opera other than growing up listening to it on the radio and on old LP recordings and attending a lot of avant-garde operas in New York. I have professional training in visual art and writing. The issues underlying both operas (and I’ll go into the first one in a minute) are so large, so complicated, and so over the top that opera seems the only way to address them. Not musical theater; only opera can express what I want to say and the way I want to say it. It’s the only space that can give myths and hyperbole a chance to exist and be taken seriously.

The first opera was a brainwave opera, Noor, and it asks if there is a place in human consciousness where surveillance cannot go. It premiered inside a 360-degree theater. I understood that the human brain could launch emotionally themed videos and audio. I chose the videos and audio to, for example, make a frustrated image or a frustrated sound, frustration being one of the four emotions that can easily be measured by a brainwave headset. (See The Theatre Times: http://thetheatretimes.com/ellen-pearlman-brain-opera-telematic-performance-and-decoding-dreams/) After that was successful, I realized the next step would be to wire up a human with a bodysuit of light to show her emotions live-time and have her interact with an AI.

I made the AI character from scratch. I worked with a programmer for 10 months and built the AI to my specifications, using a very important new technology called GPT-2. This technology is the equivalent of a chatbot on steroids, and GPT-2 has already been surpassed by a newer technology called GPT-3, which is way more powerful. But GPT-3 didn’t exist at the time of my making the opera.

I don’t know of another way that humans who are not that technically savvy can experience the implications of what these technologies mean unless it’s through a performative mode that is almost overblown. That environment, especially if they can’t sit down and must walk around as the performance is going on, allows them to enter the world of the lived experience. That’s the objective, to use immersion and interactivity. By interactivity I mean the live-time performer walks into the audience, walks around the audience, looks the audience in the eye, and even touches the audience very lightly. There’s nothing aggressive or threatening there, just a light brush in a very calm, sort of Tai Chi manner. This captures or enraptures the audience in a very soft way, so they become immersed in the experience. It is a very strong audiovisual, sonic, haptic and conceptual performance all occurring at the same time.

This is PART 1 of the interview. To read PART 2, click here.

This post was written by the author in their personal capacity. The opinions expressed in this article are the author’s own and do not reflect the view of The Theatre Times, their staff or collaborators.

This post was written by Allison Berkoy.
